I recently came across a statement from Palantir CEO Alex Karp that really made me think. He said that a surveillance state is preferable to China winning the AI race. At first this sounds like an extreme view, but it’s worth considering the context and the implications.

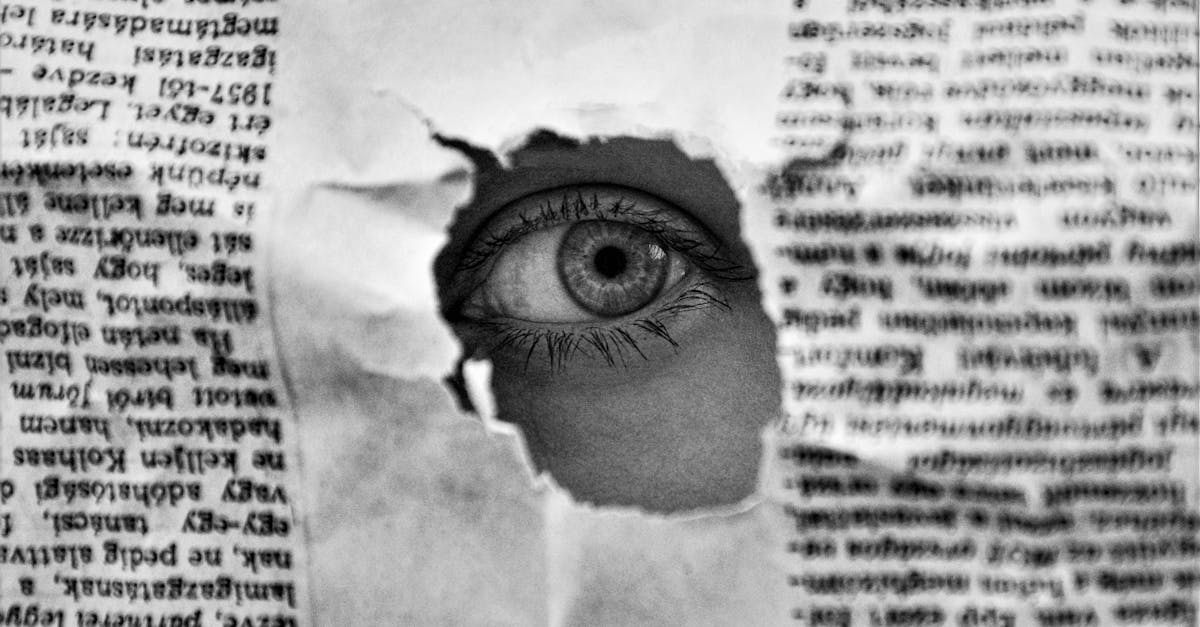

On one hand, the idea of a surveillance state is unsettling. It raises concerns about privacy, freedom, and the potential for abuse of power. On the other hand, the prospect of China dominating the AI landscape is also worrying. It could give a single country disproportionate control over how AI is developed and used, with far-reaching consequences for global stability and security.

So, what does this mean for us? Is a surveillance state really the lesser of two evils? I’m not sure I agree with the Palantir CEO’s assessment, but it’s an important conversation to have. As AI continues to advance and play a larger role in our lives, we need to think carefully about how we want to balance individual rights with national security and economic interests.

Some of the key questions we should be asking ourselves include:

* What are the potential benefits and drawbacks of a surveillance state, and how can we mitigate the risks?

* How can we ensure that AI development is transparent, accountable, and aligned with human values?

* What role should governments, corporations, and individuals play in shaping the future of AI, and how can we work together to create a more equitable and secure world?

These are complex issues, and there are no easy answers. But by engaging in open and honest discussions, we can start to build a better understanding of the challenges and opportunities ahead, and work towards creating a future that is both safe and free.