I just stumbled upon an app that can convert any text into high-quality audio. It’s pretty cool. Whether it’s a blog post, a PDF, or even a photo of some text, the app can read it aloud for you. The best part? It works with a variety of sources, including web pages, Substack and Medium articles, and more.

The app is designed with privacy in mind, so it doesn’t access anything on your device without permission. It asks for access only when you choose to share a file for audio conversion.

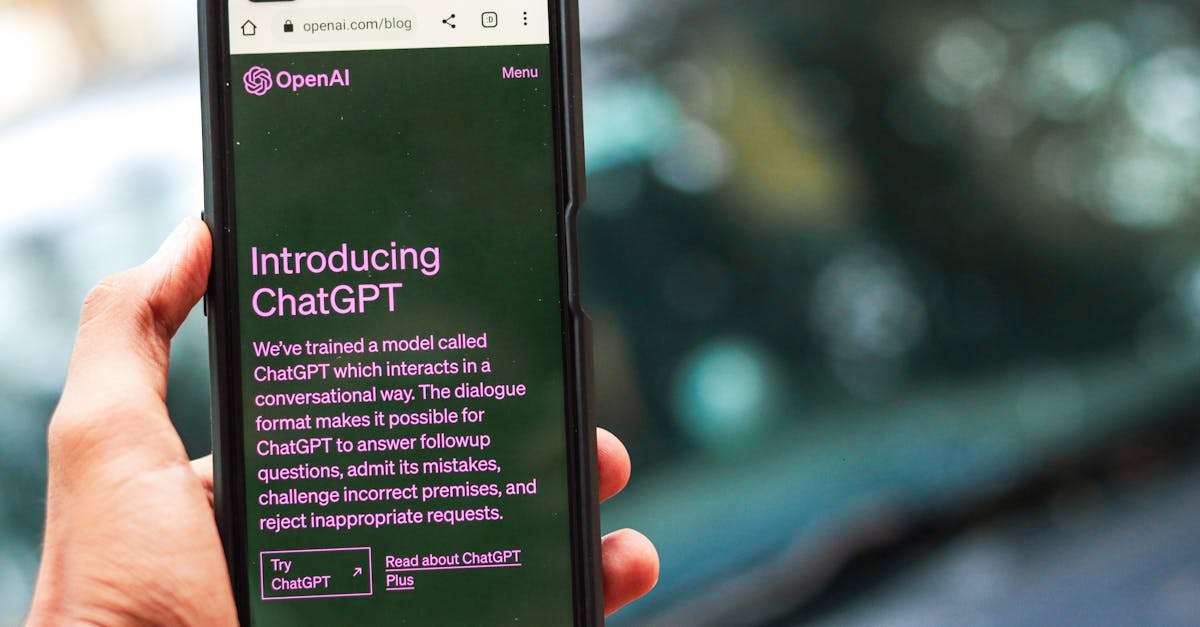

One of the most impressive features is the ability to take a photo of any text and have the app extract and read it aloud. This could be a game-changer for people who want to listen to text on the go.

The app is available for both iPhone and Android devices, and it’s completely free. If you’re interested in giving it a try, you can find the links to download it below.

So, what do you think? Would you use an app like this to convert text into audio? I’m definitely curious to see how it works and how people will use it.