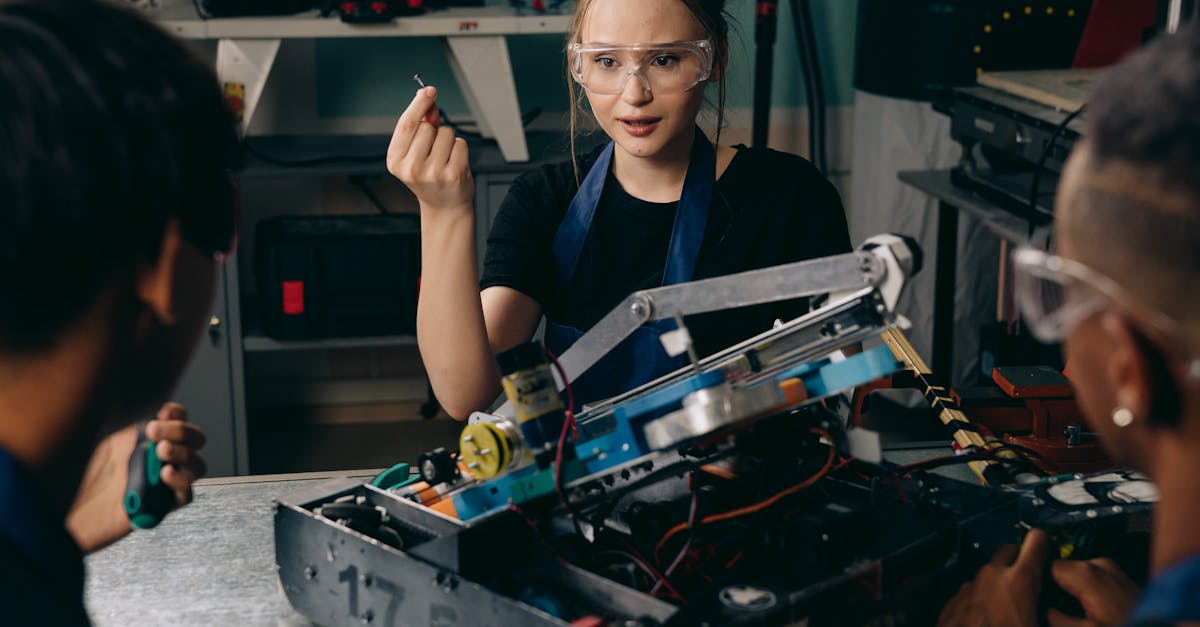

I’ve been digging into machine learning, and I stumbled upon an interesting question: why aren’t TPUs (Tensor Processing Units) as well-known as GPUs (Graphics Processing Units)? TPUs are designed specifically for machine learning, and depending on the workload and cloud pricing they can be cheaper to run than GPUs. So, what’s behind the lack of hype around TPUs and their creator, Google?

One reason might be that GPUs have been around for longer and have a more established reputation in the field of computer hardware. NVIDIA, in particular, has been a major player in the GPU market for years, and their products are widely used for both gaming and professional applications. As a result, GPUs have become synonymous with high-performance computing, while TPUs are still relatively new and mostly associated with Google’s internal projects.

Another factor could be the way TPUs are sold and presented to the public. Google uses TPUs to power its own machine learning services, such as Google Cloud AI Platform, and rents them out through Google Cloud, but it hasn’t promoted them as hardware you can buy off the shelf. In contrast, NVIDIA has been actively pushing its GPUs as a solution for a wide range of applications, from gaming to professional video editing.

But here’s the thing: TPUs are really good at what they do. Machine learning workloads are dominated by large matrix multiplications over big batches of data, and TPUs are built around hardware dedicated to exactly that kind of math. By specializing in these operations, TPUs can provide better performance and efficiency than GPUs for some workloads.
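
To make that a bit more concrete, here’s a minimal JAX sketch of the kind of computation TPUs are built for: a single dense layer, which is mostly one big matrix multiplication. The function name `dense_layer` and the array sizes are my own illustrative choices, not anything from Google. The same code should run on a TPU, a GPU, or a plain CPU, depending on what JAX finds on your machine.

```python
import jax
import jax.numpy as jnp

# Show which devices JAX can see. On a Cloud TPU VM this lists TPU cores;
# elsewhere it falls back to GPUs or the CPU.
print(jax.devices())

@jax.jit  # compiled by XLA for whatever backend is available
def dense_layer(x, w, b):
    # One matrix multiplication plus a bias and a ReLU: the bread and butter
    # of neural network workloads.
    return jax.nn.relu(jnp.dot(x, w) + b)

# Hypothetical sizes, chosen only for illustration.
key = jax.random.PRNGKey(0)
kx, kw = jax.random.split(key)
x = jax.random.normal(kx, (128, 512))   # a batch of 128 inputs
w = jax.random.normal(kw, (512, 256))   # layer weights
b = jnp.zeros((256,))                   # layer bias

y = dense_layer(x, w, b)
print(y.shape, "computed on", jax.default_backend())
```

Nothing here is TPU-specific, and that’s sort of the point: the framework picks the backend, so trying a TPU is mostly a matter of where you run the code.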

So, why should you care about TPUs? Well, if you’re interested in machine learning or just want to stay up-to-date with the latest developments in the field, it’s worth keeping an eye on them. As Google continues to develop and refine its TPU technology, we may see more applications and use cases emerge.

In the end, it’s not necessarily a question of TPUs vs. GPUs, but rather a matter of understanding the strengths and weaknesses of each technology. Knowing where TPUs shine makes it easier to pick the right hardware for a given machine learning or AI research project.