I’ve been working with machine learning systems for a while now, and I keep running into the same problem. Models that look great on paper often fail in production because they learn correlations rather than causal mechanisms. That matters: a model that has only picked up patterns in its training data can break when conditions shift, and it can’t tell you what will happen when you act on its predictions.

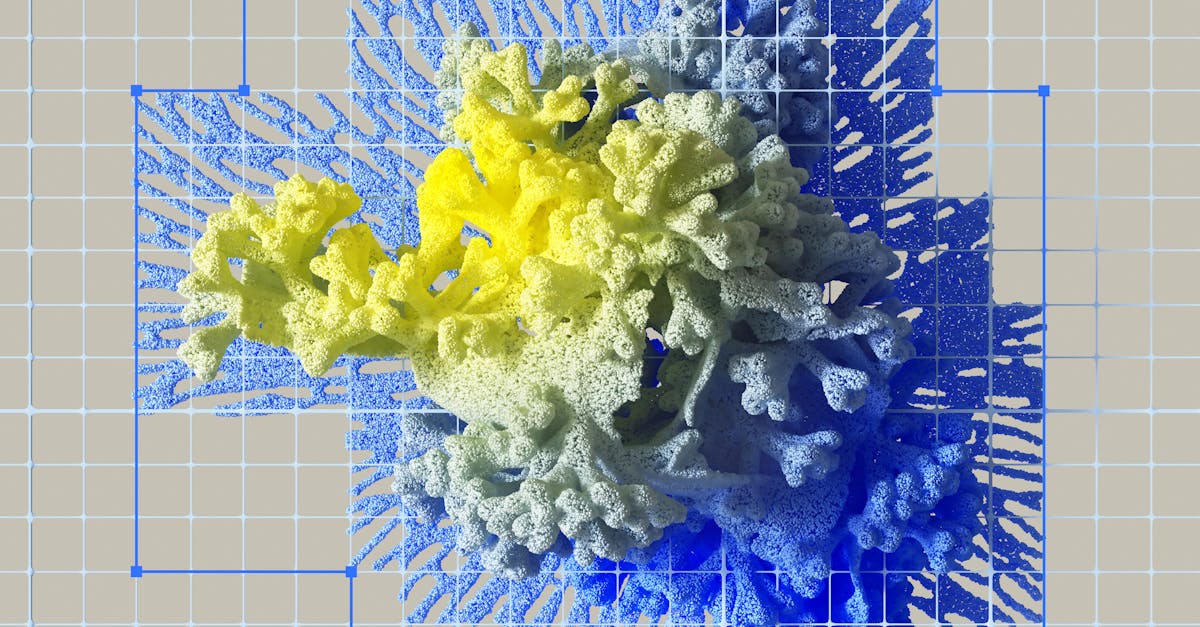

Let me give you an example. Imagine you’re building a model to diagnose plant diseases. It predicts the disease with 90% accuracy, but if it has only learned correlations, the treatment recommendations you derive from it might actually make things worse. That’s because prediction isn’t the same as intervention: a model can tell you what’s happening without knowing how to fix it.
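To make the gap between prediction and intervention concrete, here is a minimal simulation sketch. Everything in it is made up for illustration: the infection rate, the disease probabilities, and the two hypothetical policies (`farmer_policy`, `randomized_policy`) are assumptions, not numbers from a real dataset. It assumes farmers mostly spray plants that already look infected, which is enough to make the treatment look harmful in observational data even though it helps.

```python
import random

def simulate(n, treat_policy, seed=0):
    """Simulate n plants; return (treated, diseased_at_harvest) pairs."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        infected = rng.random() < 0.3          # hidden cause (assumed rate)
        treated = treat_policy(infected, rng)  # who gets sprayed
        p_disease = 0.05                       # baseline risk if not infected
        if infected:
            # Assumed effect: treatment halves the risk for infected plants.
            p_disease = 0.40 if treated else 0.80
        rows.append((treated, rng.random() < p_disease))
    return rows

def disease_rate(rows, treated):
    """Share of plants that end up diseased within one treatment group."""
    outcomes = [d for t, d in rows if t == treated]
    return sum(outcomes) / len(outcomes)

def farmer_policy(infected, rng):
    # Observational setting: infected plants are far more likely to be sprayed.
    return rng.random() < (0.9 if infected else 0.1)

def randomized_policy(infected, rng):
    # Interventional setting: a coin flip decides, ignoring infection status.
    return rng.random() < 0.5

obs = simulate(20000, farmer_policy)
exp = simulate(20000, randomized_policy)

# Difference in disease rate, treated minus untreated, under each setting.
obs_diff = disease_rate(obs, True) - disease_rate(obs, False)
exp_diff = disease_rate(exp, True) - disease_rate(exp, False)

print(f"observational difference: {obs_diff:+.3f}")
print(f"randomized difference:    {exp_diff:+.3f}")
```

Under these assumptions the observational difference comes out positive (treated plants look sicker) while the randomized difference comes out negative (treatment reduces disease). A model trained only on the observational data could predict disease well and still suggest withholding the treatment.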

So, what’s the solution? Build models that understand causality. That means modeling the underlying mechanisms that generate the data, not just the patterns in the data itself. It’s a harder problem, but a more important one.

I’ve been exploring this topic in a series of blog posts, where I dive into the details of building causal machine learning systems. I cover topics like Pearl’s Ladder of Causation, a framework that separates causal reasoning into three levels: association, intervention, and counterfactuals. I also look at the practical side, like when you actually need a causal model and when correlation is enough.
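For readers who like notation, the three rungs of Pearl’s ladder are commonly summarized with the quantities below. This is a rough sketch of the standard presentation rather than anything specific to the series; here $x$ stands for an action or treatment, $y$ for an outcome, and $x'$, $y'$ for what was actually observed.

```latex
\begin{align*}
\text{Rung 1, association (seeing):} \quad & P(y \mid x) \\
\text{Rung 2, intervention (doing):} \quad & P(y \mid \mathrm{do}(x)) \\
\text{Rung 3, counterfactuals (imagining):} \quad & P(y_x \mid x', y')
\end{align*}
```

A purely predictive model estimates the first quantity. Questions like "what happens if I apply this treatment?" live on the second rung, and in general the first quantity alone does not answer them.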

One of the key insights from this work is that a model can be very good at predicting something and still give you bad advice. High accuracy tells you the model has captured patterns in the data, not that acting on those patterns will produce the outcome you want. If a model is going to drive decisions, it needs to capture causes.

If you’re interested in learning more, I’d recommend checking out my blog series. It’s a deep dive into the world of causal machine learning, but it’s also accessible to anyone who’s interested in the topic. And if you have any thoughts or questions, I’d love to hear them.