I’ve often wondered: what’s the typical background of someone who excels in Machine Learning? Do they usually come from a Software Engineering background, or from a mix of different fields?

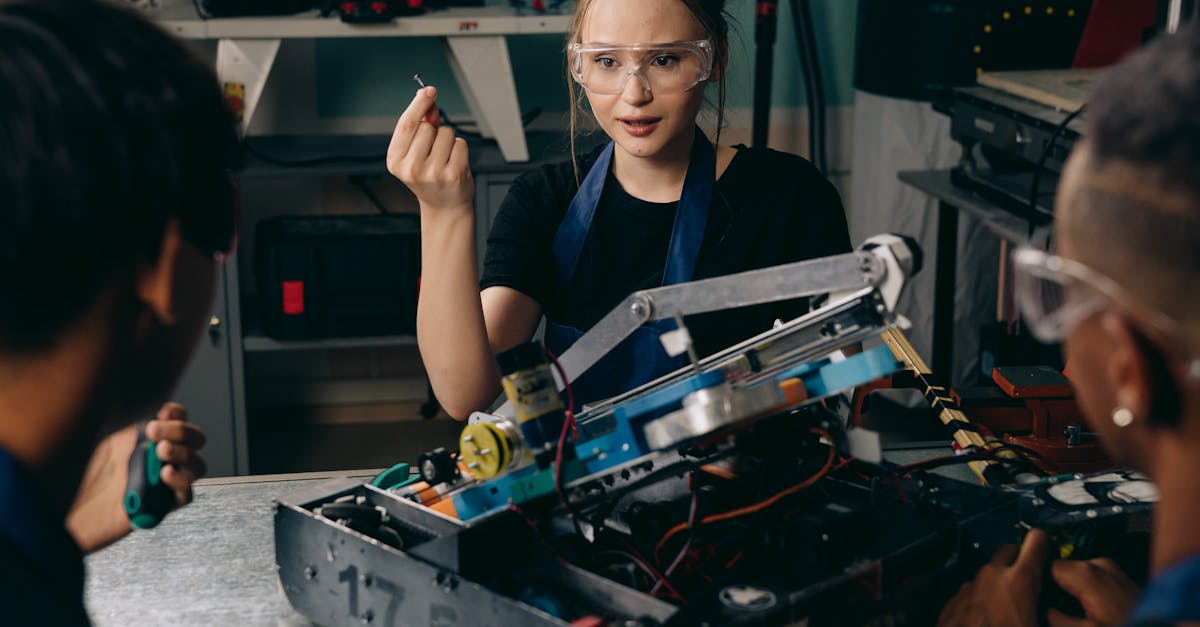

As I dug deeper, I found that many professionals in Machine Learning do have a strong foundation in Software Engineering. That makes sense, considering the amount of coding involved in building and training models. But it’s not the only path.

Some people transition into Machine Learning from other areas like mathematics, statistics, or domain-specific fields like biology or physics. What matters is a solid understanding of the underlying concepts: linear algebra, calculus, and probability.

So, if you’re interested in Machine Learning but don’t have a Software Engineering background, don’t worry. You can still learn and excel in the field. It might take some extra effort to get up to speed with programming languages like Python or R, but it’s definitely possible.

On the other hand, if you’re a Software Engineer looking to get into Machine Learning, you’re already ahead of the game. Your coding skills will serve as a strong foundation, and you can focus on learning the Machine Learning concepts and frameworks.

Either way, it’s an exciting field to be in, with endless opportunities to learn and grow. What’s your background, and how did you get into Machine Learning? I’d love to hear your story.